Accessing data via STAC API¶

Libraries needed for this exercise are imported below:

import geogif # render gifs from raster images

import geopandas as gpd # handle geospatial data frames

from IPython.display import Image # visualize URLs

import pandas as pd # data wrangling

import pystac_client # connecting to the STAC API

from rasterio.enums import Resampling # perform resampling operations

import rioxarray # handle spatio-temporal arrays

import shapely # create vector objects

import stackstac # build an on-demand STAC data cube

Querying data with pystac-client¶

STAC stands for SpatioTemporal Asset Catalog and it is "a common language to describe geospatial information, so it can more easily be worked with, indexed, and discovered".

pystac-client allows the querying of a STAC API using Python.

There are several APIs available to query data, you can browse them all in the STAC catalog index. Some of these APIs will require authentication to access the data. We will use the Earth Search catalog for this notebook, which allows querying data on Amazon Web Services (AWS). The data we will fetch does not require authentication.

# STAC API URL

api_url = 'https://earth-search.aws.element84.com/v1'

To start fetching data, we will open the client. We can see the collections available for this API:

client = pystac_client.Client.open(api_url)

for collection in client.get_collections():

print(collection)

<CollectionClient id=cop-dem-glo-30> <CollectionClient id=naip> <CollectionClient id=sentinel-2-l2a> <CollectionClient id=sentinel-2-l1c> <CollectionClient id=landsat-c2-l2> <CollectionClient id=cop-dem-glo-90> <CollectionClient id=sentinel-1-grd>

Let's focus on Sentinel-2 data level 2a. Here are the different levels that Sentinel-2 has. Level 2a provides atmospherically corrected data representing surface reflectance.

# collection ID

collection = 'sentinel-2-l2a'

Let's define now the spatial and temporal extent for our query. We will query all scenes intersecting the point coordinates and the time range given.

# coordinates

lon = 16.9

lat = 52.4

# date range

datetime = '2022-05-01/2022-10-01'

point = shapely.Point(lon, lat)

And we pass these arguments to our search:

search = client.search(

collections=[collection],

intersects=point,

datetime=datetime,

query=["eo:cloud_cover<10"],

)

items = search.item_collection()

len(items)

8

We can view our query as a Geopandas data frame for easier readability:

df = gpd.GeoDataFrame.from_features(items.to_dict(), crs="epsg:4326")

df

| geometry | created | platform | constellation | instruments | eo:cloud_cover | proj:epsg | mgrs:utm_zone | mgrs:latitude_band | mgrs:grid_square | ... | s2:datastrip_id | s2:granule_id | s2:reflectance_conversion_factor | datetime | s2:sequence | earthsearch:s3_path | earthsearch:payload_id | earthsearch:boa_offset_applied | processing:software | updated | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | POLYGON ((16.49848 53.24020, 17.39166 53.22562... | 2022-11-06T13:14:57.270Z | sentinel-2a | sentinel-2 | [msi] | 0.003331 | 32633 | 33 | U | XU | ... | S2A_OPER_MSI_L2A_DS_ATOS_20220826T162059_S2022... | S2A_OPER_MSI_L2A_TL_ATOS_20220826T162059_A0374... | 0.977922 | 2022-08-26T10:16:12.680000Z | 0 | s3://sentinel-cogs/sentinel-s2-l2a-cogs/33/U/X... | roda-sentinel2/workflow-sentinel2-to-stac/bff1... | True | {'sentinel2-to-stac': '0.1.0'} | 2022-11-06T13:14:57.270Z |

| 1 | POLYGON ((16.49848 53.24020, 17.39346 53.22558... | 2022-11-06T12:53:48.090Z | sentinel-2a | sentinel-2 | [msi] | 4.800238 | 32633 | 33 | U | XU | ... | S2A_OPER_MSI_L2A_DS_ATOS_20220816T162557_S2022... | S2A_OPER_MSI_L2A_TL_ATOS_20220816T162557_A0373... | 0.974147 | 2022-08-16T10:16:12.609000Z | 0 | s3://sentinel-cogs/sentinel-s2-l2a-cogs/33/U/X... | roda-sentinel2/workflow-sentinel2-to-stac/b5b7... | True | {'sentinel2-to-stac': '0.1.0'} | 2022-11-06T12:53:48.090Z |

| 2 | POLYGON ((16.49848 53.24020, 18.14175 53.20819... | 2022-11-06T13:12:58.202Z | sentinel-2a | sentinel-2 | [msi] | 8.775087 | 32633 | 33 | U | XU | ... | S2A_OPER_MSI_L2A_DS_ATOS_20220803T135155_S2022... | S2A_OPER_MSI_L2A_TL_ATOS_20220803T135155_A0371... | 0.970396 | 2022-08-03T10:06:14.409000Z | 0 | s3://sentinel-cogs/sentinel-s2-l2a-cogs/33/U/X... | roda-sentinel2/workflow-sentinel2-to-stac/6245... | True | {'sentinel2-to-stac': '0.1.0'} | 2022-11-06T13:12:58.202Z |

| 3 | POLYGON ((16.49848 53.24020, 18.14175 53.20819... | 2022-11-06T13:14:37.621Z | sentinel-2b | sentinel-2 | [msi] | 0.005511 | 32633 | 33 | U | XU | ... | S2B_OPER_MSI_L2A_DS_2BPS_20220719T113943_S2022... | S2B_OPER_MSI_L2A_TL_2BPS_20220719T113943_A0280... | 0.967885 | 2022-07-19T10:06:08.645000Z | 0 | s3://sentinel-cogs/sentinel-s2-l2a-cogs/33/U/X... | roda-sentinel2/workflow-sentinel2-to-stac/4f39... | True | {'sentinel2-to-stac': '0.1.0'} | 2022-11-06T13:14:37.621Z |

| 4 | POLYGON ((16.49848 53.24020, 18.14175 53.20819... | 2022-11-06T12:54:05.312Z | sentinel-2a | sentinel-2 | [msi] | 1.825375 | 32633 | 33 | U | XU | ... | S2A_OPER_MSI_L2A_DS_ATOS_20220714T175057_S2022... | S2A_OPER_MSI_L2A_TL_ATOS_20220714T175057_A0368... | 0.967507 | 2022-07-14T10:06:16.331000Z | 0 | s3://sentinel-cogs/sentinel-s2-l2a-cogs/33/U/X... | roda-sentinel2/workflow-sentinel2-to-stac/1cea... | True | {'sentinel2-to-stac': '0.1.0'} | 2022-11-06T12:54:05.312Z |

| 5 | POLYGON ((16.49848 53.24020, 17.39346 53.22558... | 2022-11-06T12:55:07.931Z | sentinel-2a | sentinel-2 | [msi] | 1.067175 | 32633 | 33 | U | XU | ... | S2A_OPER_MSI_L2A_DS_ATOS_20220627T162810_S2022... | S2A_OPER_MSI_L2A_TL_ATOS_20220627T162810_A0366... | 0.967975 | 2022-06-27T10:16:12.822000Z | 0 | s3://sentinel-cogs/sentinel-s2-l2a-cogs/33/U/X... | roda-sentinel2/workflow-sentinel2-to-stac/fd88... | True | {'sentinel2-to-stac': '0.1.0'} | 2022-11-06T12:55:07.931Z |

| 6 | POLYGON ((16.49848 53.24020, 18.14175 53.20819... | 2022-11-06T12:52:50.337Z | sentinel-2a | sentinel-2 | [msi] | 0.002495 | 32633 | 33 | U | XU | ... | S2A_OPER_MSI_L2A_DS_ATOS_20220624T143914_S2022... | S2A_OPER_MSI_L2A_TL_ATOS_20220624T143914_A0365... | 0.968339 | 2022-06-24T10:06:16.177000Z | 0 | s3://sentinel-cogs/sentinel-s2-l2a-cogs/33/U/X... | roda-sentinel2/workflow-sentinel2-to-stac/9b8a... | True | {'sentinel2-to-stac': '0.1.0'} | 2022-11-06T12:52:50.337Z |

| 7 | POLYGON ((16.49848 53.24020, 18.14175 53.20819... | 2022-11-06T13:16:38.195Z | sentinel-2b | sentinel-2 | [msi] | 7.491449 | 32633 | 33 | U | XU | ... | S2B_OPER_MSI_L2A_DS_2BPS_20220619T114329_S2022... | S2B_OPER_MSI_L2A_TL_2BPS_20220619T114329_A0276... | 0.969127 | 2022-06-19T10:06:08.087000Z | 0 | s3://sentinel-cogs/sentinel-s2-l2a-cogs/33/U/X... | roda-sentinel2/workflow-sentinel2-to-stac/58f8... | True | {'sentinel2-to-stac': '0.1.0'} | 2022-11-06T13:16:38.195Z |

8 rows × 42 columns

This proves useful for example when we want to visualize the cloud cover of our whole collection:

df["datetime"] = pd.to_datetime(df["datetime"])

ts = df.set_index("datetime").sort_index()["eo:cloud_cover"]

ts.plot(title="eo:cloud-cover")

<Axes: title={'center': 'eo:cloud-cover'}, xlabel='datetime'>

Let's explore the properties of one item. But first let's look for an item index with low cloud cover and low nodata.

df_filt = df.loc[(df['eo:cloud_cover'] <= 2) & (df['s2:nodata_pixel_percentage'] <= 10)]

df_filt

| geometry | created | platform | constellation | instruments | eo:cloud_cover | proj:epsg | mgrs:utm_zone | mgrs:latitude_band | mgrs:grid_square | ... | s2:datastrip_id | s2:granule_id | s2:reflectance_conversion_factor | datetime | s2:sequence | earthsearch:s3_path | earthsearch:payload_id | earthsearch:boa_offset_applied | processing:software | updated | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 3 | POLYGON ((16.49848 53.24020, 18.14175 53.20819... | 2022-11-06T13:14:37.621Z | sentinel-2b | sentinel-2 | [msi] | 0.005511 | 32633 | 33 | U | XU | ... | S2B_OPER_MSI_L2A_DS_2BPS_20220719T113943_S2022... | S2B_OPER_MSI_L2A_TL_2BPS_20220719T113943_A0280... | 0.967885 | 2022-07-19 10:06:08.645000+00:00 | 0 | s3://sentinel-cogs/sentinel-s2-l2a-cogs/33/U/X... | roda-sentinel2/workflow-sentinel2-to-stac/4f39... | True | {'sentinel2-to-stac': '0.1.0'} | 2022-11-06T13:14:37.621Z |

| 4 | POLYGON ((16.49848 53.24020, 18.14175 53.20819... | 2022-11-06T12:54:05.312Z | sentinel-2a | sentinel-2 | [msi] | 1.825375 | 32633 | 33 | U | XU | ... | S2A_OPER_MSI_L2A_DS_ATOS_20220714T175057_S2022... | S2A_OPER_MSI_L2A_TL_ATOS_20220714T175057_A0368... | 0.967507 | 2022-07-14 10:06:16.331000+00:00 | 0 | s3://sentinel-cogs/sentinel-s2-l2a-cogs/33/U/X... | roda-sentinel2/workflow-sentinel2-to-stac/1cea... | True | {'sentinel2-to-stac': '0.1.0'} | 2022-11-06T12:54:05.312Z |

| 6 | POLYGON ((16.49848 53.24020, 18.14175 53.20819... | 2022-11-06T12:52:50.337Z | sentinel-2a | sentinel-2 | [msi] | 0.002495 | 32633 | 33 | U | XU | ... | S2A_OPER_MSI_L2A_DS_ATOS_20220624T143914_S2022... | S2A_OPER_MSI_L2A_TL_ATOS_20220624T143914_A0365... | 0.968339 | 2022-06-24 10:06:16.177000+00:00 | 0 | s3://sentinel-cogs/sentinel-s2-l2a-cogs/33/U/X... | roda-sentinel2/workflow-sentinel2-to-stac/9b8a... | True | {'sentinel2-to-stac': '0.1.0'} | 2022-11-06T12:52:50.337Z |

3 rows × 42 columns

item = items[df_filt.index[0]]

item.geometry

{'type': 'Polygon',

'coordinates': [[[16.498475093400046, 53.240199174677954],

[18.141754296879448, 53.20819279121764],

[18.071664488869853, 52.22257539160585],

[16.464995307918354, 52.25346561204129],

[16.498475093400046, 53.240199174677954]]]}

item.datetime

datetime.datetime(2022, 7, 19, 10, 6, 8, 645000, tzinfo=tzutc())

item.properties

{'created': '2022-11-06T13:14:37.621Z',

'platform': 'sentinel-2b',

'constellation': 'sentinel-2',

'instruments': ['msi'],

'eo:cloud_cover': 0.005511,

'proj:epsg': 32633,

'mgrs:utm_zone': 33,

'mgrs:latitude_band': 'U',

'mgrs:grid_square': 'XU',

'grid:code': 'MGRS-33UXU',

'view:sun_azimuth': 157.993614061013,

'view:sun_elevation': 56.588942214785,

's2:degraded_msi_data_percentage': 0,

's2:nodata_pixel_percentage': 3e-06,

's2:saturated_defective_pixel_percentage': 0,

's2:dark_features_percentage': 0.004429,

's2:cloud_shadow_percentage': 0,

's2:vegetation_percentage': 64.467937,

's2:not_vegetated_percentage': 34.062394,

's2:water_percentage': 1.263951,

's2:unclassified_percentage': 0.195772,

's2:medium_proba_clouds_percentage': 7e-05,

's2:high_proba_clouds_percentage': 3e-06,

's2:thin_cirrus_percentage': 0.005438,

's2:snow_ice_percentage': 0,

's2:product_type': 'S2MSI2A',

's2:processing_baseline': '04.00',

's2:product_uri': 'S2B_MSIL2A_20220719T095559_N0400_R122_T33UXU_20220719T113943.SAFE',

's2:generation_time': '2022-07-19T11:39:43.000000Z',

's2:datatake_id': 'GS2B_20220719T095559_028033_N04.00',

's2:datatake_type': 'INS-NOBS',

's2:datastrip_id': 'S2B_OPER_MSI_L2A_DS_2BPS_20220719T113943_S20220719T095747_N04.00',

's2:granule_id': 'S2B_OPER_MSI_L2A_TL_2BPS_20220719T113943_A028033_T33UXU_N04.00',

's2:reflectance_conversion_factor': 0.967884952505288,

'datetime': '2022-07-19T10:06:08.645000Z',

's2:sequence': '0',

'earthsearch:s3_path': 's3://sentinel-cogs/sentinel-s2-l2a-cogs/33/U/XU/2022/7/S2B_33UXU_20220719_0_L2A',

'earthsearch:payload_id': 'roda-sentinel2/workflow-sentinel2-to-stac/4f39107c8fe71ec8f29d674a811d6c21',

'earthsearch:boa_offset_applied': True,

'processing:software': {'sentinel2-to-stac': '0.1.0'},

'updated': '2022-11-06T13:14:37.621Z'}

We can also take a look at the assets for the item. That is which bands are available.

item.assets.keys()

dict_keys(['aot', 'blue', 'coastal', 'granule_metadata', 'green', 'nir', 'nir08', 'nir09', 'red', 'rededge1', 'rededge2', 'rededge3', 'scl', 'swir16', 'swir22', 'thumbnail', 'tileinfo_metadata', 'visual', 'wvp', 'aot-jp2', 'blue-jp2', 'coastal-jp2', 'green-jp2', 'nir-jp2', 'nir08-jp2', 'nir09-jp2', 'red-jp2', 'rededge1-jp2', 'rededge2-jp2', 'rededge3-jp2', 'scl-jp2', 'swir16-jp2', 'swir22-jp2', 'visual-jp2', 'wvp-jp2'])

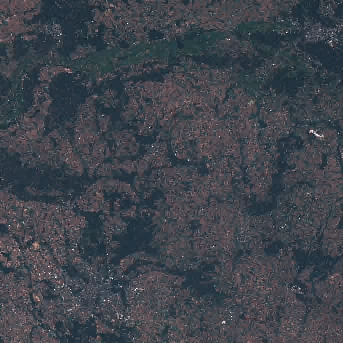

And we can also preview how this scene looks like:

thumbnail = item.assets["thumbnail"].href

Image(url = thumbnail)

Let's take a look at one single band:

asset = item.assets["red"]

asset.extra_fields

{'eo:bands': [{'name': 'red',

'common_name': 'red',

'description': 'Red (band 4)',

'center_wavelength': 0.665,

'full_width_half_max': 0.038}],

'gsd': 10,

'proj:shape': [10980, 10980],

'proj:transform': [10, 0, 600000, 0, -10, 5900040],

'raster:bands': [{'nodata': 0,

'data_type': 'uint16',

'bits_per_sample': 15,

'spatial_resolution': 10,

'scale': 0.0001,

'offset': -0.1}]}

And read it with rioxarray.

red = rioxarray.open_rasterio(item.assets["red"].href, decode_coords="all")

We can now plot the data, in this case a subset to speed up the process. That is achieved with the isel() function.

red.isel(x=slice(2000, 4000), y=slice(8000, 10500)).plot(robust=True)

<matplotlib.collections.QuadMesh at 0x7f8e44b78fd0>

What about an RGB representation?

rgb = rioxarray.open_rasterio(item.assets["visual"].href)

rgb.isel(x=slice(2000, 4000), y=slice(8000, 10500)).plot.imshow()

<matplotlib.image.AxesImage at 0x7f8e44c1a410>

footprint = gpd.read_file("../../data/poznan.geojson")

footprint.total_bounds

array([16.75738404, 52.29364398, 17.16361743, 52.53564773])

cube = stackstac.stack(

items,

resolution=200,

bounds_latlon=footprint.total_bounds,

resampling=Resampling.bilinear

)

cube

/opt/conda/lib/python3.11/site-packages/stackstac/prepare.py:364: UserWarning: The argument 'infer_datetime_format' is deprecated and will be removed in a future version. A strict version of it is now the default, see https://pandas.pydata.org/pdeps/0004-consistent-to-datetime-parsing.html. You can safely remove this argument. times = pd.to_datetime(

<xarray.DataArray 'stackstac-26bf16b18d945f83e54e4d04b774ec18' (time: 8,

band: 32,

y: 140, x: 143)>

dask.array<fetch_raster_window, shape=(8, 32, 140, 143), dtype=float64, chunksize=(1, 1, 140, 143), chunktype=numpy.ndarray>

Coordinates: (12/52)

* time (time) datetime64[ns] 2022-06-19...

id (time) <U24 'S2B_33UXU_20220619_...

* band (band) <U12 'aot' ... 'wvp-jp2'

* x (x) float64 6.19e+05 ... 6.474e+05

* y (y) float64 5.823e+06 ... 5.795e+06

s2:nodata_pixel_percentage (time) object 0 0 ... 60.458738

... ...

title (band) <U31 'Aerosol optical thi...

gsd (band) object None 10 ... None None

common_name (band) object None 'blue' ... None

center_wavelength (band) object None 0.49 ... None

full_width_half_max (band) object None 0.098 ... None

epsg int64 32633

Attributes:

spec: RasterSpec(epsg=32633, bounds=(619000, 5795000, 647600, 5823...

crs: epsg:32633

transform: | 200.00, 0.00, 619000.00|\n| 0.00,-200.00, 5823000.00|\n| 0...

resolution: 200We can further wrangle this cube by selecting only RGB bands and creating monthly composites. We can achieve this with xarray resample. This are all the time range formats supported.

rgb = cube.sel(band=["red", "green", "blue"])

monthly = rgb.resample(time="MS").median("time", keep_attrs=True)

monthly

<xarray.DataArray 'stackstac-26bf16b18d945f83e54e4d04b774ec18' (time: 3,

band: 3,

y: 140, x: 143)>

dask.array<stack, shape=(3, 3, 140, 143), dtype=float64, chunksize=(1, 3, 140, 143), chunktype=numpy.ndarray>

Coordinates: (12/26)

* band (band) <U12 'red' 'green' 'blue'

* x (x) float64 6.19e+05 ... 6.474e+05

* y (y) float64 5.823e+06 ... 5.795e+06

earthsearch:boa_offset_applied bool True

proj:epsg int64 32633

s2:saturated_defective_pixel_percentage int64 0

... ...

gsd (band) object 10 10 10

common_name (band) object 'red' 'green' 'blue'

center_wavelength (band) object 0.665 0.56 0.49

full_width_half_max (band) object 0.038 0.045 0.098

epsg int64 32633

* time (time) datetime64[ns] 2022-06-01...

Attributes:

spec: RasterSpec(epsg=32633, bounds=(619000, 5795000, 647600, 5823...

crs: epsg:32633

transform: | 200.00, 0.00, 619000.00|\n| 0.00,-200.00, 5823000.00|\n| 0...

resolution: 200We will use the compute() function from dask to read our object in-memory.

monthly = monthly.compute()

This will make plotting tasks faster.

monthly.plot.imshow(

col="time",

col_wrap=3,

rgb="band",

robust=True,

size=4,

add_labels=False,

)

<xarray.plot.facetgrid.FacetGrid at 0x7f8e507dbd50>

Let's take a look now at a smaller area to visualize crops around Poznan.

crops = gpd.read_file("../../data/crops.geojson")

cube = stackstac.stack(

items,

resolution=10,

bounds_latlon=crops.total_bounds,

resampling=Resampling.bilinear

)

rgb = cube.sel(band=["red", "green", "blue"])

rgb

/opt/conda/lib/python3.11/site-packages/stackstac/prepare.py:364: UserWarning: The argument 'infer_datetime_format' is deprecated and will be removed in a future version. A strict version of it is now the default, see https://pandas.pydata.org/pdeps/0004-consistent-to-datetime-parsing.html. You can safely remove this argument. times = pd.to_datetime(

<xarray.DataArray 'stackstac-34b7fdf5d830fa45909152427d7b3f8a' (time: 8,

band: 3,

y: 748, x: 1239)>

dask.array<getitem, shape=(8, 3, 748, 1239), dtype=float64, chunksize=(1, 1, 748, 1024), chunktype=numpy.ndarray>

Coordinates: (12/52)

* time (time) datetime64[ns] 2022-06-19...

id (time) <U24 'S2B_33UXU_20220619_...

* band (band) <U12 'red' 'green' 'blue'

* x (x) float64 6.328e+05 ... 6.452e+05

* y (y) float64 5.809e+06 ... 5.801e+06

s2:nodata_pixel_percentage (time) object 0 0 ... 60.458738

... ...

title (band) <U31 'Red (band 4) - 10m'...

gsd (band) object 10 10 10

common_name (band) object 'red' 'green' 'blue'

center_wavelength (band) object 0.665 0.56 0.49

full_width_half_max (band) object 0.038 0.045 0.098

epsg int64 32633

Attributes:

spec: RasterSpec(epsg=32633, bounds=(632780, 5801330, 645170, 5808...

crs: epsg:32633

transform: | 10.00, 0.00, 632780.00|\n| 0.00,-10.00, 5808810.00|\n| 0.0...

resolution: 10For a quick view, we can generate a GIF of the Sentinel-2 scenes we have available.

gif_crops = geogif.dgif(rgb).compute()

/opt/conda/lib/python3.11/site-packages/geogif/gif.py:190: RuntimeWarning: invalid value encountered in cast

u8 = (data * 255).astype("uint8")

gif_crops

We can work on derived calculation, for example, we can compute the NDVI per scene

nir, red = cube.sel(band="nir"), cube.sel(band="red")

ndvi = (nir - red) / (nir + red)

ndvi

<xarray.DataArray 'stackstac-34b7fdf5d830fa45909152427d7b3f8a' (time: 8,

y: 748, x: 1239)>

dask.array<truediv, shape=(8, 748, 1239), dtype=float64, chunksize=(1, 748, 1024), chunktype=numpy.ndarray>

Coordinates: (12/47)

* time (time) datetime64[ns] 2022-06-19...

id (time) <U24 'S2B_33UXU_20220619_...

* x (x) float64 6.328e+05 ... 6.452e+05

* y (y) float64 5.809e+06 ... 5.801e+06

s2:nodata_pixel_percentage (time) object 0 0 ... 60.458738

s2:not_vegetated_percentage (time) float64 10.43 11.0 ... 34.91

... ...

grid:code <U10 'MGRS-33UXU'

s2:processing_baseline <U5 '04.00'

created (time) <U24 '2022-11-06T13:16:38...

raster:bands object [{'nodata': 0, 'data_type...

gsd object 10

epsg int64 32633Let's create a composite with the maximum NDVI value for the whole collection over time.

ndvi_comp = ndvi.max("time")

ndvi_comp

<xarray.DataArray 'stackstac-34b7fdf5d830fa45909152427d7b3f8a' (y: 748, x: 1239)>

dask.array<_nanmax_skip-aggregate, shape=(748, 1239), dtype=float64, chunksize=(748, 1024), chunktype=numpy.ndarray>

Coordinates: (12/20)

* x (x) float64 6.328e+05 ... 6.452e+05

* y (y) float64 5.809e+06 ... 5.801e+06

earthsearch:boa_offset_applied bool True

proj:epsg int64 32633

s2:saturated_defective_pixel_percentage int64 0

s2:datatake_type <U8 'INS-NOBS'

... ...

mgrs:utm_zone int64 33

grid:code <U10 'MGRS-33UXU'

s2:processing_baseline <U5 '04.00'

raster:bands object [{'nodata': 0, 'data_type...

gsd object 10

epsg int64 32633ndvi_comp = ndvi_comp.compute()

ndvi_comp.plot(vmin=0, vmax=0.8, cmap="YlGn")

<matplotlib.collections.QuadMesh at 0x7f8e50bb8f10>

And finally, let's compute the NDVI anomaly, i.e., how much does each pixel from the composite deviates from the mean of the whole collection.

anomaly = ndvi_comp - ndvi.mean()

anomaly = anomaly.compute()

anomaly.plot(cmap="PiYG")

<matplotlib.collections.QuadMesh at 0x7f8e50b10fd0>

Downloading the data¶

There might be at some point the need to download the scenes that you just queried. To do so you can use the os and urllib modules that are part of the python standard library as the code snippet below shows. This will download all of the items from your search, so make sure you apply enough filtering so that you don't download data that you don't need.

download_path = "path/to/dir"

for item in items:

download_path = os.path.join(item.collection_id, item.id)

if not os.path.exists(download_path):

os.makedirs(download_path, exist_ok=True)

for name, asset in item.assets.items():

urllib.request.urlretrieve(asset.href,

os.path.join(download_path,

os.path.basename(asset.href)))

Exercises¶

- Adjust your STAC query accordingly and create a new data cube grouped by season. Think about data sizes and play with an AOI of your choice.

- Compute a time series of NDVI values for one crop parcel of your choice. Hint: you can easily create a geojson polygon with https://geojson.io/. Take the temporal grouping of your choice, but what would make sense to compare such vegetation values?

More resources¶

This material was based on the Carpentries introduction to Geospatial RAster and Vector data in Python and the EGU23 short course on the same topic by Francesco Nattino and Ou Ku.